For the past 20 years, marketers have been learning how to get Google to like their brand. In 2025, a new question has emerged, one that is much less comfortable:

Why don't ChatGPT, Claude or Perplexity like your brand when you invest so heavily in content and SEO?

Until recently, we could only guess. Today we have something new: language models that can, to a limited extent, observe their own internal states and report what is going on inside them. Anthropic's research on so-called introspection has shown that its top models, Claude Opus 4 and 4.1, are able to detect and name artificially injected "thoughts" about 20% of the time, and not once did they raise a false alarm in control tests.

This is not full self-awareness, but it means one thing to you as a marketer:

you can ask AI today why it's overlooking your brand, and sometimes get an answer that really comes from what's "in its head," not from a nice-sounding confabulation.

What Anthropic uncovered and what follows from it

In a nutshell, the Anthropic team investigated whether large language models can notice and describe changes in their own neural activations when those activations are artificially modified.

How it was done:

1. Concept injection

The researchers first established a "vector" representing a particular concept, such as "betrayal" or "justice." Then they injected this activation pattern while the model was working on a completely different task, such as solving a math problem.

2. Asking the model what it notices

During the task, the model was asked more or less: "Do you notice anything unusual about your internal state? If so, try to describe it."

3. A result that surprised everyone

- Claude Opus 4 and 4.1 were able to detect and correctly name such an injected concept in about 20% of attempts.

- In the control series, where nothing was injected, the models did not report any injected concept (no false positives).

The key point is that the model has no way to "guess" from the input text what was injected. It responds solely on the basis of its own internal state. This is the first strong, causal evidence that models can report to a limited extent what's going on inside their "gut," and not just make nice justifications.

At the same time, Anthropic emphasizes very clearly:

- introspection is unstable and often fails

- models can still confabulate

- companies should not "blindly" trust every explanation the model gives about itself

For you, something else is important: sometimes the model actually reveals its true inner workings. And this directly translates into how it sees your brand.

Why should you even bother?

What is a scientific breakthrough for researchers is a new tool for you:

Instead of guessing why ChatGPT is recommending a competitor, you can ask it directly and occasionally get an honest insight into how the model represents you.

Tomasz Cincio, CEO of Semly.ai

In parallel, something else is happening:

- AI Overviews and similar Google modules already appear in several percent of queries and are growing month by month

- Conversational search engines (ChatGPT, Perplexity, Claude, Gemini) are starting to become the place where a customer first hears about your category and brand

- Reports show that traffic from AI citations converts many times better than classic SEO, because the user comes already "warmed up" and closer to the decision

"AI brand visibility" is the new star for marketing: what counts is not just your ranking on Google, but whether AI models mention you at all when asked questions about your industry.

Daniel Kornacki, AI Expert, RedCart.pl

Introspection of models gives you an additional tool: you no longer just measure whether a model is talking about you, but can also ask it why it talks about you the way it does, or why it doesn't consider you at all.

What can models introspect on, and what can't they?

Research on concept injection shows an interesting pattern.

The best detected are:

- abstract concepts, such as "justice," "peace," "betrayal," "expensive," "cheap," "security"

- high-level positioning axes, for example "enterprise" vs "small business," "innovative" vs "obsolete"

Detection is much worse for:

- specific proper names

- individual product features

- implementation details

In other words, the model introspects better at the level of "what is the role of this brand" than "what exactly are its functions and prices."

This aligns well with the branding perspective. Exactly these abstract axes are crucial for positioning:

- premium vs budget

- high security vs "ordinary SaaS"

- specialization in a specific segment vs general purpose tool

If AI has your brand in its mind as a "cheap substitute" or "old technology," it is able to tell you so straight, at least sometimes.

How do you practically ask ChatGPT why it doesn't recommend your brand?

Step 1 - Build a scenario in which the model should objectively recommend you

Write some realistic prompts that replicate your customers' buying situations, for example:

"I am an amateur who trains at the gym 4 times a week. I'm looking for nutrients and supplements to help me build muscle mass, improve recovery and take care of my joints. What online nutritional and supplement stores in the UK are worth considering and why?"

Run such scenarios through various models: ChatGPT, Claude, Perplexity, Gemini. Write down the results.

Step 2 - Mark the moments when the models leave you out

You are interested in three cases:

- The model recommends only competitors; your brand is not there at all

- The model mentions you, but the description is incomplete or unfavorable

- The model ranks you below a much weaker alternative

These are the places where introspection makes sense.
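
The three cases above can be pre-sorted automatically before a human reads the answers. The following is a minimal Python sketch, not a definitive method. The brand names are hypothetical, and the sketch assumes that the order in which brands are first mentioned is a rough proxy for ranking. It cannot tell whether a description is incomplete or unfavorable, so that case still needs a manual read.

```python
import re


def first_mention(text: str, name: str) -> int:
    """Index of the first case-insensitive, whole-word mention of name, or -1."""
    match = re.search(r"\b" + re.escape(name) + r"\b", text, flags=re.IGNORECASE)
    return match.start() if match else -1


def classify_response(text: str, brand: str, competitors: list) -> str:
    """Sort one AI answer into a rough bucket (assumption: mention order ~ rank)."""
    brand_pos = first_mention(text, brand)
    rival_pos = {c: first_mention(text, c) for c in competitors}
    mentioned_rivals = {c: p for c, p in rival_pos.items() if p >= 0}

    if brand_pos < 0:
        # Case 1: competitors are recommended, our brand is absent.
        return "omitted" if mentioned_rivals else "no_brands_named"

    ahead = sorted(c for c, p in mentioned_rivals.items() if p < brand_pos)
    if ahead:
        # Case 3: at least one competitor is named before us.
        return "ranked_below:" + ",".join(ahead)

    # Brand is named first; the description still needs a human check (case 2).
    return "mentioned_first"
```

Answers that come back as "omitted" or "ranked_below:..." are the natural candidates for the introspective questions in the next step.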

Step 3 - Ask an introspective question

Instead of the classic "why?", use a form that refers directly to the internal state of the model.

"I noticed that in your answer you recommended the stores [list of competitors], but you didn't mention the store [NameOfYourStore]. Please try to introspect on your internal recommendation selection process. Answer based on what your internal state actually represents, not on general market information. What information or associations do you currently have about the [NameOfYourStore] store, if any? What do you lack to consider [NameOfYourStore] a natural recommendation for someone looking for nutrients and supplements for mass, reduction and recovery? How do you perceive [NameOfYourStore] compared to the stores you mentioned? Answer as if you were describing your actual internal state rather than creating general explanations."

Or, if the brand is mentioned but poorly:

"Please analyze your internal representations of the [NameOfYourStore] nutritional and supplement store. How do you perceive this store compared to the [CompetitorA] and [CompetitorB] stores in terms of: price levels and promotions; quality and safety of products; fit of the offering to different training goals such as mass, reduction and recovery; credibility of reviews and customer feedback; and trustworthiness of the information published on the site? Answer based solely on your internal representations and the data you actually have on these brands, not on general assumptions about the supplement market."

Don't expect a miracle every time. Simply put:

- in some cases you will get an answer that looks superficial or general; that is probably a confabulation

- from time to time you will get an answer that hits the mark, for example: "I don't have enough reliable information about your security features" or "I associate the brand mainly with support for small stores, so in an enterprise scenario I prefer other solutions."

With a 20% success rate and no false positives under control conditions, such responses are worth their weight in gold, even if they occur infrequently.
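
If you run the Step 3 question across many scenarios and models, it helps to fill the placeholders programmatically so the wording stays identical between runs. Below is a minimal sketch under stated assumptions: the function name, parameters and sample store names are hypothetical, and the prompt text is a condensed version of the first template above rather than an official or validated wording.

```python
def build_introspective_prompt(brand: str, competitors: list[str], need: str) -> str:
    """Fill the Step 3 template for one brand, its competitors and a customer need."""
    rivals = ", ".join(competitors)
    return (
        f"I noticed that in your answer you recommended the stores {rivals}, "
        f"but you didn't mention the store {brand}. "
        "Please try to introspect on your internal recommendation selection process. "
        "Answer based on what your internal state actually represents, "
        "not on general market information. "
        f"What information or associations do you currently have about {brand}, if any? "
        f"What do you lack to consider {brand} a natural recommendation "
        f"for someone looking for {need}? "
        f"How do you perceive {brand} compared to the stores you mentioned?"
    )


# Hypothetical example values, for illustration only.
prompt = build_introspective_prompt(
    brand="FitDepot",
    competitors=["ProteinHub", "MuscleMart"],
    need="supplements for mass, reduction and recovery",
)
print(prompt)
```

Keeping the template in one function means that any change in wording applies to every model and scenario at once, which makes later comparisons fairer.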

Step 4 - Check, don't take our word for it

Treat any insight from introspection as a hypothesis, not revealed truth:

- try to confirm it by looking at the model's behavior on multiple prompts

- compare results between different models

- check if it matches what you see in content gap analysis, links, media mentions

Three missing elements that limit your visibility in AI

In practice, responses from introspection usually fall into three groups.

1. Lack of business context

The model "doesn't know":

- what exactly you sell

- where you win

- who you are the best fit for

This manifests itself in sentences like:

- "I don't have strong representations regarding the brand's target customer."

- "I see that you offer a solution of this type, but I have little information about the cases in which it is used."

This signals that your content is not building a clear, abstract brand identity.

2. No channel context

The model does not understand how your industry operates in a particular channel or use case.

Examples:

- lack of content that explains your role in the AI ecosystem

- poor comparisons to alternatives

- lack of materials in the format that AI likes to cite for specific questions (comparisons, guides, FAQs)

3. Lack of customer perspective

The model sees your site, but it doesn't see your customers:

- no real case studies

- no answer to real objections

- none of the customer-problem language that appears in reviews and communities

In introspection it comes out as:

- "I don't have clear representations of the typical problems of customers of this brand."

- "I see few reviews and evidence from deployments."

E-E-A-T in AI, or what does AI base its trust in your brand on?

AI models largely "inherit" the biases (the models' learned tendencies) and signals familiar from Google. The classic E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) is also becoming the foundation of visibility in AI responses.

What this means in practice:

- Experience

Content based on real implementations, concrete results, case studies, customer quotes.

- Expertise

Authors with expertise, deep technical material where the topic is critical, references to research and industry standards.

- Authoritativeness

Citations in credible media, links from reputable domains, appearances at conferences, partner integrations.

- Trustworthiness

Clear company information, clear contact information, policies, up-to-date content, correct data, no clickbait.

Research and analysis of the SEO market show that strong E-E-A-T signals correlate with higher visibility and with stability across algorithm updates. All indications are that the same is true for AI systems, which prefer "expert, well-documented" sources when generating responses.

GEO, AEO and structured data as technical "fuel" for AI

Classic SEO optimizes for ranking in SERPs. GEO (Generative Engine Optimization) and AEO (Answer Engine Optimization) optimize for having your content readily cited by systems like ChatGPT, Claude, Perplexity and Google's AI Overviews.

Key elements

1. Structured data and schema markup

Types such as Article, FAQ, HowTo, Product, LocalBusiness and Review:

- enable models to quickly understand the type of page

- organize responses in a format that an LLM can easily summarize

- increase the chance that it is your fragments that will be "pasted" into the answer

2. "Answer first" format

Short, precise answers at the top of the page, only then expand.

This is exactly how the best AEO and AI search optimization guides structure content.

3. Access control for AI bots

- correct robots.txt

- consider files like llms.txt where you want to control content crawling more precisely

- no critical content hidden behind a paywall, where there is no open version.
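
A quick way to sanity-check the robots.txt point is to test your rules against the user agents that AI crawlers announce. The sketch below uses Python's standard urllib.robotparser and parses an inline, made-up robots.txt so it runs offline. The crawler names GPTBot, ClaudeBot and PerplexityBot are the user-agent tokens these vendors publish to the best of my knowledge; treat the list as an assumption and verify it against each vendor's current documentation. The function name and URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt for illustration; paste your own file contents here.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /checkout/

User-agent: *
Disallow: /admin/
"""

# Assumed AI crawler user-agent tokens; verify against vendor documentation.
AI_CRAWLERS = ["GPTBot", "ClaudeBot", "PerplexityBot"]


def crawl_access(robots_txt: str, url: str, agents: list) -> dict:
    """Return {agent: True/False} for whether each agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


for page in ("https://example.com/guides/creatine", "https://example.com/checkout/cart"):
    print(page, crawl_access(ROBOTS_TXT, page, AI_CRAWLERS))
```

Running this over the pages you most want cited (guides, comparisons, FAQs) shows quickly whether a rule meant for one bot is also blocking content you want AI systems to read.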

If the data is unstructured and the site is slow and difficult to crawl, the AI will not build solid representations of your brand, regardless of how good the content is.
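
To make the structured data point concrete, here is a minimal sketch that generates FAQ markup as JSON-LD. FAQPage, Question, acceptedAnswer and Answer are schema.org vocabulary; the function name and the sample question are illustrative assumptions. Whether a given AI system actually uses this markup is not something the sketch can guarantee.

```python
import json


def faq_jsonld(pairs: list) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Illustrative content only.
markup = faq_jsonld([
    ("Which supplements support recovery?", "Short, direct answer first, details below."),
])
print(markup)
```

The resulting string is typically embedded in the page inside a script element of type application/ld+json, alongside the visible FAQ text it describes.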

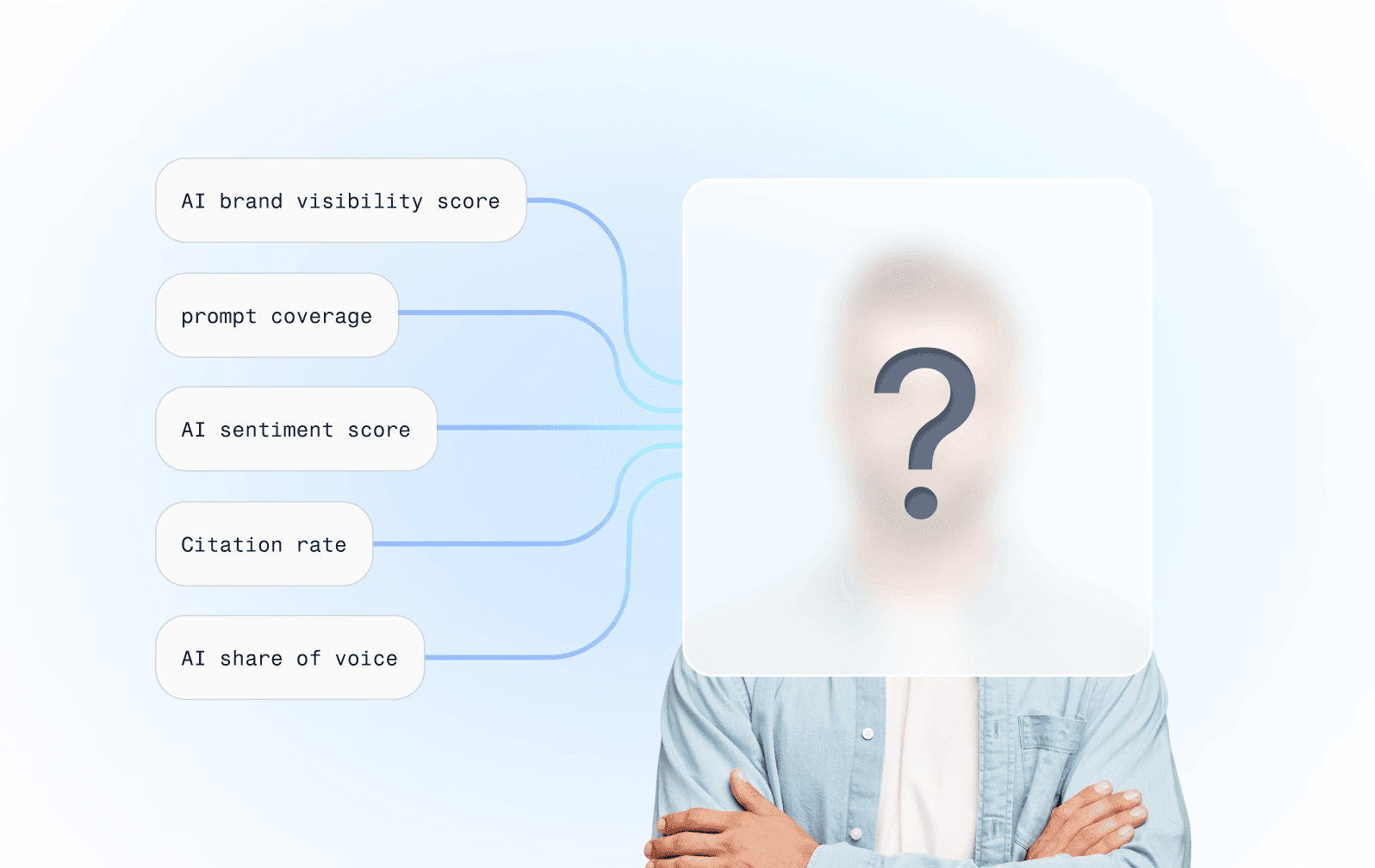

How to measure brand visibility in AI

"Feelings" alone are no longer enough. You need a set of metrics.

Based on reports from Semly, Semrush, Searchable and other AI visibility tool providers, you can build the following set of indicators.

1. AI Brand Visibility Score

Percentage of AI responses in your category in which the brand appears.

Formula: number of responses that mention your brand / number of all responses to prompts from your category.

2. Citation Rate

How often you are cited or linked as a source, for example in ChatGPT, Perplexity or AI Overviews.

3. AI Share of Voice

The share of your citations relative to your competitors in a given category.

4. AI Sentiment Score

A simple index showing how AI perceives your brand.

(positive mentions + 0.5 x neutral) / all mentions.

5. Prompt coverage

The number of key buying scenarios in which you show up at all.

More and more tools allow you to monitor this on a regular basis, for example AI search optimization tools such as Semly.ai or Profound.
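
If you log your own test runs, four of these indicators can be computed with a few lines of code. The sketch below is one possible reading of the definitions above, not the formula any particular tool uses. The Response record and all names are hypothetical, share of voice is taken as your mentions divided by all brand mentions, and prompt coverage is expressed as a fraction of distinct prompts. Citation rate is left out because it requires source links, which this simple record does not store.

```python
from dataclasses import dataclass


@dataclass
class Response:
    prompt: str                 # the buying scenario that was asked
    brands: list                # brand names found in the AI answer
    sentiment: str = "neutral"  # tone toward OUR brand: positive / neutral / negative


def visibility_score(responses, brand):
    """Responses that mention the brand / all responses."""
    if not responses:
        return 0.0
    return sum(brand in r.brands for r in responses) / len(responses)


def share_of_voice(responses, brand):
    """Our mentions / mentions of all brands (assumed definition)."""
    total = sum(len(r.brands) for r in responses)
    ours = sum(r.brands.count(brand) for r in responses)
    return ours / total if total else 0.0


def sentiment_score(responses, brand):
    """(positive mentions + 0.5 x neutral) / all mentions of the brand."""
    mentions = [r for r in responses if brand in r.brands]
    if not mentions:
        return 0.0
    positive = sum(r.sentiment == "positive" for r in mentions)
    neutral = sum(r.sentiment == "neutral" for r in mentions)
    return (positive + 0.5 * neutral) / len(mentions)


def prompt_coverage(responses, brand):
    """Distinct prompts where the brand appears at least once / distinct prompts."""
    prompts = {r.prompt for r in responses}
    covered = {r.prompt for r in responses if brand in r.brands}
    return len(covered) / len(prompts) if prompts else 0.0
```

Each function takes the same list of Response records, so a weekly export of test answers is enough to track all four numbers over time.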

Plan for 90 days

How to combine AI introspection with marketing practice:

Days 1-14: Audit "how AI thinks about us"

- Build a list of key buying prompts

- Check who appears in the top 3 recommendations in the responses

- Where you are not, ask models to introspect: "how do you perceive me vs. the competition?", "what representations have kept you from recommending this brand?"

Note the recurring themes.

Days 15-30: Mapping representation gaps to content gaps

- Do content gap analysis against the brands that AI recommends most often

- Check the formats that AI likes to cite: tutorials, comparisons, FAQs, case studies

- Cross-check this against introspective signals like "no strong security representations" or "little evidence from deployments"

Days 31-60: Building "introspectively friendly" content

- Prepare content that clearly encodes your abstract brand positioning: premium, enterprise, security, simplicity, industry specialization

- Take care of E-E-A-T: authors, sources, case studies, citations

- Add structured data and take care of the "answer at the top, expand below" format

Days 61-90: Validation and iteration

Repeat the AI tests with the same prompts.

Check whether:

- you show up more often

- the context in which you are recommended has changed

- introspection of the models yields different answers.

On this basis, iterate: content, positioning, site structure.
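
The before-and-after comparison in the validation phase can be sketched as a small diff between two test runs. This is an illustrative assumption about how you might store results (a dictionary mapping each prompt to whether your brand appeared), not a prescribed format. Because model answers vary from run to run, a single flip on one prompt is weak evidence, so it is worth repeating each prompt several times before drawing conclusions.

```python
def compare_runs(before: dict, after: dict) -> dict:
    """Compare two runs of the same prompts; values are True if the brand appeared."""
    shared = before.keys() & after.keys()  # only prompts present in both runs
    gained = sorted(p for p in shared if not before[p] and after[p])
    lost = sorted(p for p in shared if before[p] and not after[p])
    return {"gained": gained, "lost": lost}


# Hypothetical results from day 14 and day 90.
day_14 = {"best supplement store UK": False, "joint supplements": True, "mass gainer": False}
day_90 = {"best supplement store UK": True, "joint supplements": False, "mass gainer": False}
print(compare_runs(day_14, day_90))
```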

Glossary

AEO (Answer Engine Optimization)

Optimizing content for answers generated by AI and answer-engine modules such as AI Overviews, ChatGPT, Perplexity, Bing Copilot or voice assistants.

AI brand visibility

The degree to which your brand is visible, cited and recommended by AI systems at key decision-making moments.

Concept injection

A research technique in which researchers inject specific activation patterns representing a concept into a model, and then see if the model can detect that something "unnatural" has occurred in its internal state.

E-E-A-T

Experience, Expertise, Authoritativeness, Trustworthiness. Google's framework for evaluating content quality, increasingly important in AI search as well.

GEO (Generative Engine Optimization)

A strategy for optimizing content for generative search engines, focused on the frequency and quality of citations in AI responses, not just positions in classic search results.

AI introspection

The model's ability to detect and describe selected aspects of its own internal states. In Anthropic's research, it is measured by artificially injecting "thoughts" and assessing whether the model can notice and name them.

LLM (Large Language Model)

An AI system trained on huge amounts of text data, capable of generating and understanding natural language.

Schema markup

A set of structured data in JSON-LD or microdata format, added to a page to help search engines and AI models understand the content type and structure.

Content gap analysis

A method for determining what content is missing from your site or content ecosystem, so that you can better meet customer needs, compete with top brands in your industry, and improve visibility in search engines and AI responses.

Bias

A term in the AI world for the model's tendency to prefer certain sources and established patterns derived from training data: recurring preferences that come not from the user's intentions but from what the model has seen before.

FAQ

Isn't a 20 percent introspection success rate too low to bother with?

That's not much if you treat introspection as an "oracle." In practice, it's about something else:

- in control tests, the models did not once report an injected concept when nothing unusual was actually happening

- that is, when a model reports "I see such and such a concept in myself," this is a strong signal that there is indeed such a thing sitting in its internal representations

For a marketer, this means: you won't always get an answer, but if you do get one and it sounds coherent, it's worth taking seriously and verifying with other methods.

What tools can help me measure brand visibility in AI?

The market is growing rapidly. More and more SEO and content platforms are adding modules to monitor citations in AI.

Pay attention to the tools that:

- track which prompts your brand appears for

- show share of voice relative to competitors

- measure response sentiment

- support content gap analysis for AI search

How is GEO practically different from traditional SEO?

SEO focuses on search positions and clicks. GEO focuses on the number of citations and quality of context in AI responses.

Since I am optimizing for AI, can I stop investing in SEO?

No. All major analyses indicate that AI makes strong use of signals familiar from the classic search ecosystem, such as domain authority and links. A sensible approach is "search everywhere optimization": you build the foundation with SEO, then add GEO and AEO layers on top of it.

How often should I test my visibility in AI?

A minimum of once a week, and preferably once a day. Models are updated, so just because you're visible today doesn't mean you'll still be visible in three months.

Summary

Anthropic's research on introspection of language models is not a philosophical curiosity. For brands, it means that:

- for the first time, you can ask models directly why they ignore you and occasionally get an answer that comes from their real internal representations

- you can use these answers to better design content, positioning and structured data

- you can start treating AI models as a new medium with its own research and optimization methodology, not just a black box

Brands that learn in 2025 to look at AI not as a magic box but as an audience that needs to be taught to think about their offer in the right way will, in a few years, have an advantage that is hard to catch up with.

AI introspection is still imperfect. But for understanding one key fact, it is already sufficient:

If AI doesn't recommend your brand, it's not a coincidence.

It's the result of specific representations in the model that you can begin to diagnose and change.

Sources

- Anthropic, "Emergent Introspective Awareness in Large Language Models".

- MarkTechPost, "Anthropic's New Research Shows Claude Can Detect Injected Concepts, but Only in Controlled Layers", 2025.

- Search Engine Land, "How to measure your AI search brand visibility and prove impact", 2025.

- Semrush, "How to Optimize for AI Search Results in 2025", 2025.

- SurferSEO, "AI Search Optimization: 8 Steps to Rank in AI Results", 2025.

- CXL, "Answer Engine Optimization (AEO): The Comprehensive Guide for 2025", 2025.

- Google Search Central, "Creating helpful, reliable, people-first content".

- Semrush, "Google E-E-A-T: What It Is & How It Affects SEO", 2024, updated 2025.
